The Overlooked Power of Paid Search for Manufacturers

by RefractROI

It’s not often that people receive e-mails from Google itself. When they do, the content is sure to have a drastic effect on the nature of search. So imagine the morning, near the end of July, when webmasters checked their inboxes and found a warning that their rankings could suffer if they didn’t act soon.

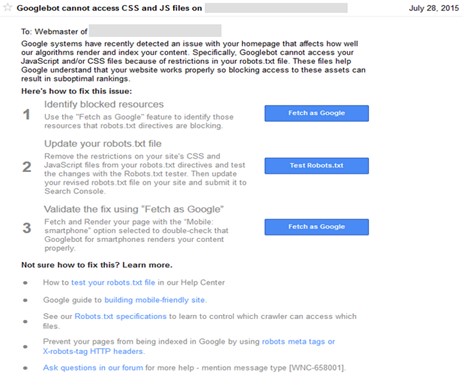

Denver SEO companies received the e-mail, referred to as [WNC-658001], about websites that continually block CSS and JavaScript. The warning also appeared as an alert in the Google Search Console. Here’s a sample of the notice webmasters received that day.

The alert was actually a follow-up to a statement made the previous year about a change in the webmaster guidelines intended to help the search engine find, index, and rank a website.

The most significant change – for webmasters at least – is the first bullet point of the new technical guidelines.

Formerly, the guidelines advised webmasters to block features such as CSS and JavaScript to allow the spiders to crawl the website more effectively. According to the old guidelines, webmasters could best test their sites if they:

“Use a text browser such as Lynx to examine your site, because most search engine spiders see your site much as Lynx would. If fancy features such as JavaScript, cookies, session IDs, frames, DHTML, or Flash keep you from seeing all of your site in a text browser, then search engine spiders may have trouble crawling your site.”

The search engine has evolved dramatically since those early days, however, and is more than capable of fetching and rendering pages in their complete form. The new guidelines therefore advise webmasters to do the complete opposite. In order for Google to get a better understanding of a site’s quality, its spiders need to enter its every nook and cranny.

“To help Google fully understand your site’s contents, allow all of your site’s assets, such as CSS and JavaScript files, to be crawled. The Google indexing system renders webpages using the HTML of a page as well as its assets such as images, CSS, and JavaScript files. To see the page assets that Googlebot cannot crawl and to debug directives in your robots.txt file, use the Fetch as Google and the robots.txt Tester tools in Webmaster Tools.”

In fact, some SEO firms even remember similar warnings from Google about blocking such features as far back as 2012.

The timing of the newest alert is no accident either, as it closely follows significant quality updates such as Panda, Mobilegeddon, and even the still mysterious Phantom. CSS and JavaScript files often dictate the quality of the responsive design elements of a website. Blocking them would cause the site to lose points with algorithms that place responsiveness as a priority, such as the three mentioned above.

Now, to be clear, these warnings aren’t indicative of an incoming penalty for websites that don’t follow suit. It’s still the webmaster’s choice whether or not to unblock CSS and JavaScript files, without the threat of a heavy consequence hanging over their heads. But forcing Google to view the site without such features will give it an incomplete picture of the site’s quality.

This is a lot like looking at a jigsaw puzzle with a few pieces missing; it’s not bad per se, but you’re not exactly sure what the picture is. These missing pieces prevent Google from appreciating the actual quality of the site, and may cause it to rank the site lower for its keywords.

Asking webmasters to unblock CSS and JavaScript is in accordance with Google’s mission of providing users with a valuable search experience.

The most common targets of the alerts seem to be WordPress websites with setups that specifically block CSS and JavaScript files. WordPress sites often block folders like wp-includes/ and wp-content/ in the robots.txt with a disallow rule. This creates problems for crawlers, as such folders include images, plugins, and themes that are essential in rendering the sites.
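To make the WordPress case concrete, here is a minimal Python sketch that uses the standard library’s `urllib.robotparser` to list which asset URLs a robots.txt would block. The robots.txt contents, the `example.com` URLs, and the `blocked_assets` helper are illustrative assumptions, not taken from any real site. Python’s parser uses simple prefix matching and does not reproduce every detail of how Googlebot reads rules, so treat the result as a rough check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with the kind of disallow setup described above.
BLOCKING_ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-includes/
Disallow: /wp-content/
"""

# Hypothetical URLs: two assets a page needs for rendering, plus one page.
ASSETS = [
    "https://example.com/wp-content/themes/mytheme/style.css",
    "https://example.com/wp-includes/js/jquery/jquery.js",
    "https://example.com/about/",
]


def blocked_assets(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_assets(BLOCKING_ROBOTS_TXT, ASSETS):
        print("blocked:", url)
```

With the sample rules, the stylesheet and script URLs are reported as blocked, while the ordinary page URL is not.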

Fortunately, there are several simple ways to remedy the website’s situation if it received an alert on the Google Search Console. Within the alert, Google laid out instructions that webmasters can follow to unblock their sites and test them before the next Google crawl.

The process has three different steps: identifying the blocks, updating the robots.txt file, and validating the fix. But, there is a shortcut that websites can take to expedite the process – at least for the block identification step.

The complex part of identifying blocked files, in this context, is determining the reason why Google sent an alert. The program merely detects blocked files; it doesn’t distinguish between CSS or JavaScript blocks and third-party blocks, which Google has already stated wouldn’t affect websites negatively.
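One way to sort a list of blocked resources by hand is to separate the site’s own CSS and JavaScript from resources hosted elsewhere. The sketch below is only an illustration of that idea: the `classify_blocked` helper, the category labels, and the sample URLs are all assumptions, and it compares hostnames exactly, so `www.example.com` and `example.com` would count as different hosts.

```python
from urllib.parse import urlparse

# File extensions treated as rendering assets in this sketch.
ASSET_EXTENSIONS = (".css", ".js")


def classify_blocked(resource_url, site_host):
    """Label a blocked resource as third-party or first-party."""
    parsed = urlparse(resource_url)
    if parsed.hostname != site_host:
        return "third-party"
    # parsed.path excludes the query string, so "style.css?ver=4" still matches.
    if parsed.path.lower().endswith(ASSET_EXTENSIONS):
        return "first-party css/js"
    return "first-party other"


if __name__ == "__main__":
    samples = [
        "https://example.com/wp-content/themes/mytheme/style.css?ver=4",
        "https://cdn.thirdparty.test/widget.js",
        "https://example.com/wp-content/uploads/logo.png",
    ]
    for url in samples:
        print(classify_blocked(url, "example.com"), url)
```

Resources in the first-party CSS/JS group are the ones the alert is about; third-party entries are the likely false positives.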

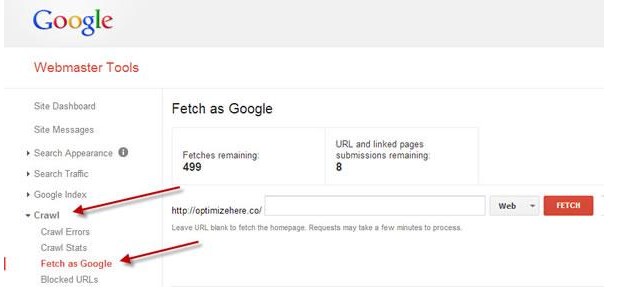

Under the Google instructions, a webmaster will have to perform a fetch and render test under the Crawl section of the Google Search Console. Webmasters will then have to compare the way Googlebot and a browser render the URL and look for discrepancies.

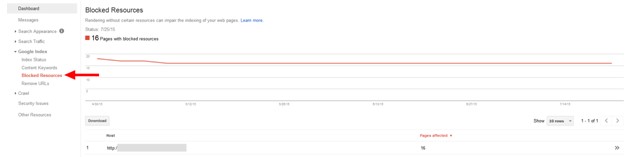

A much easier method is using the tab directly over the Crawl section. Google Search Console has a section under Google Index, labelled as “Blocked Resources.”

Clicking a single URL on this page shows webmasters how many other pages of the site are affected by each blocked resource. This is a great way to determine whether the blocks are something webmasters should be concerned about, or are just false positives.

There aren’t any shortcuts, however, for the next two steps, and webmasters would do well to follow Google’s instructions the rest of the way.
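As a rough local rehearsal of those two steps, the Python sketch below removes disallow rules for the WordPress asset folders from a robots.txt and then re-checks a stylesheet URL. It is not a substitute for Google’s own validation in the Search Console. The sample robots.txt (including the `/wp-admin/` rule), the `example.com` URLs, and both helper functions are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser

# Folders whose Disallow rules this sketch removes.
ASSET_FOLDERS = ("/wp-includes/", "/wp-content/")


def unblock_asset_folders(robots_txt):
    """Drop Disallow rules that target the asset folders; keep other lines."""
    kept = []
    for line in robots_txt.splitlines():
        field, _, value = line.partition(":")
        is_disallow = field.strip().lower() == "disallow"
        if is_disallow and value.strip().startswith(ASSET_FOLDERS):
            continue  # skip the rule that blocks an asset folder
        kept.append(line)
    return "\n".join(kept) + "\n"


def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """Check a URL against robots.txt text using Python's prefix matching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical starting point for the update step.
OLD = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
Disallow: /wp-content/
"""
NEW = unblock_asset_folders(OLD)
CSS_URL = "https://example.com/wp-content/themes/mytheme/style.css"

if __name__ == "__main__":
    print(NEW)
    print("before:", is_crawlable(OLD, CSS_URL))
    print("after:", is_crawlable(NEW, CSS_URL))
```

The sketch deletes only the rules for the two asset folders and leaves everything else untouched, so unrelated restrictions stay in place after the update.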

Past warnings from Google may have fallen on deaf ears, but webmasters would do well to heed the warnings this time. Panda, Phantom, and Mobilegeddon (and even Pigeon) continue to wreak havoc on websites that were too stubborn to make changes in the quality of their content and the responsiveness of their website.

If you’re looking for a reliable partner in not just improving the status of your website, but protecting it from the negative effects of algorithm updates as well – contact us immediately. Our teams keep a finger on the pulse of SEO, and are confident in anticipating Google’s next moves. This way, you’ll enjoy all the benefits, and avoid all the pitfalls.
